2/ Query understanding is the lifeblood of #searchengines. Large search engines have spent millions of SWE hours building signals like synonymy, spelling, term weighting, compounds, etc.

We don’t have that luxury. Fortunately for us, #LLMs are here to build upon.

3/ We solve the problem of #query similarity: determining when 2 user queries are looking for the same information on the web.

Why is this useful? Query-click data for web docs is the strongest signal for search, QA, etc. Solving query equivalence lets us smear that click signal over many more user queries.

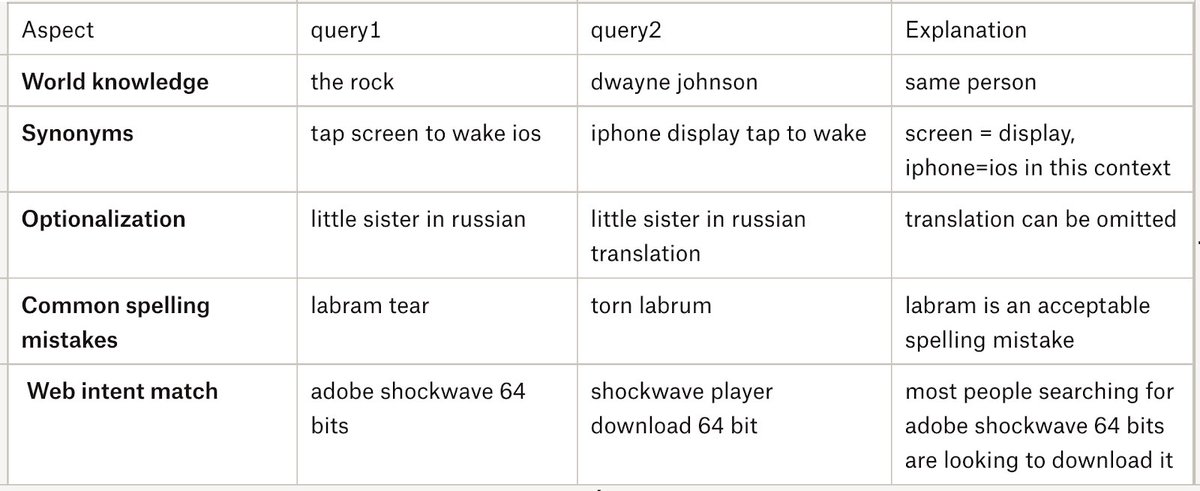
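A minimal sketch of what "smearing" click signal could look like, assuming click logs shaped as `{query: {doc_url: clicks}}` and an `equivalence(q1, q2)` function returning a score in [0, 1]. The `smear_clicks` helper, the 0.8 threshold, and the score-weighting scheme are hypothetical choices for illustration, not details from the thread.

```python
from collections import defaultdict

def smear_clicks(clicks, equivalence, threshold=0.8):
    """Pool each query's clicks with those of queries judged equivalent to it.

    clicks: {query: {doc_url: click_count}}  (hypothetical log format)
    equivalence: callable (q1, q2) -> score in [0, 1]
    threshold: hypothetical cutoff for treating two queries as equivalent
    """
    smeared = {}
    for q in clicks:
        pooled = defaultdict(float)
        for other, docs in clicks.items():
            # A query always keeps its own clicks at full weight.
            weight = 1.0 if other == q else equivalence(q, other)
            if weight < threshold:
                continue
            # Borrowed clicks are down-weighted by the equivalence score.
            for doc, count in docs.items():
                pooled[doc] += weight * count
        smeared[q] = dict(pooled)
    return smeared

# Toy example: pretend the model scored only these two queries as near-equivalent.
scores = {frozenset({"cheap flights nyc", "nyc cheap airfare"}): 0.9}

def toy_equivalence(q1, q2):
    return scores.get(frozenset({q1, q2}), 0.0)

clicks = {
    "cheap flights nyc": {"a.com": 10},
    "nyc cheap airfare": {"a.com": 3, "b.com": 2},
    "python tutorial": {"c.com": 5},
}
smeared = smear_clicks(clicks, toy_equivalence)
```

Here `python tutorial` has no equivalent query, so its clicks are unchanged, while each flight query borrows 90% of the other's clicks.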

4/ Not so obvious? Query equivalence is a suitcase problem. Once unpacked, it involves solving many semantic understanding problems.

Most importantly, it involves understanding the myriad ways in which people talk to #searchengines.

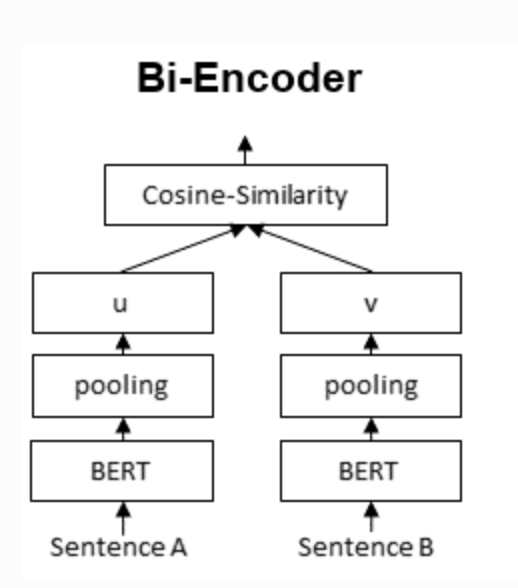

5/ We use a #BERT model to encode queries in a 384-dimensional space and use dot products in this space to compute a query equivalence score.

We use Sentence-BERT (sbert.net) as a starting point.
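The scoring step can be sketched in plain Python. This assumes the embeddings are unit-normalized, so the dot product equals cosine similarity (consistent with the cosine objective in tweet 7); the thread does not say whether normalization happens explicitly. In practice the vectors would come from the Sentence-BERT encoder (the sentence-transformers library's `SentenceTransformer.encode` accepts `normalize_embeddings=True`). The helper names and the toy 3-d vectors standing in for 384-d embeddings are made up.

```python
import math

def normalize(vec):
    """Scale a vector to unit L2 norm, so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]

def equivalence_score(emb_a, emb_b):
    """Query equivalence score: dot product of two unit-normalized embeddings."""
    a, b = normalize(emb_a), normalize(emb_b)
    return sum(x * y for x, y in zip(a, b))

# Toy 3-d vectors standing in for 384-d query embeddings.
score = equivalence_score([0.9, 0.1, 0.0], [0.8, 0.2, 0.1])
```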

The main question: how do you train this model?

6/ Answer: We use a trick to generate training data.

We created query pairs that have overlapping results in their top 5 and assigned each pair a “soft label”: similarity = #{overlapping results in top 5}/5 (labels = 0.0, 0.2, 0.4, 0.6, 0.8, 1.0).
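The labeling rule is simple enough to state directly in code. A minimal sketch, assuming each query's results are available as a ranked list of URLs; the `soft_label` name and the URLs below are made up.

```python
def soft_label(results_a, results_b, k=5):
    """Soft similarity label: number of shared URLs in the two top-k lists, divided by k."""
    shared = set(results_a[:k]) & set(results_b[:k])
    return len(shared) / k

# Hypothetical top-5 results for two queries; 3 URLs are shared.
top_a = ["u1", "u2", "u3", "u4", "u5"]
top_b = ["u2", "u9", "u4", "u1", "u7"]
label = soft_label(top_a, top_b)  # 3 / 5 = 0.6
```

With k = 5 the label can only take the six values listed above, from 0.0 (no shared results) to 1.0 (identical top 5).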

7/ We then trained a bi-encoder model that minimizes the L2 distance between the soft labels and the cosine similarity of the two queries' embeddings.
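A minimal sketch of the objective in plain Python rather than a training framework. It uses mean squared error (the squared L2 distance averaged over a batch), which has the same minimizer as the L2 distance mentioned above. In sentence-transformers, `losses.CosineSimilarityLoss` computes MSE between the cosine similarity of two embeddings and a float label by default; the thread does not say whether Neeva used that class. The function names and toy batch are illustrative.

```python
import math

def cosine(u, v):
    """Cosine similarity of two vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))

def soft_label_mse(batch):
    """Mean squared error between predicted cosine similarity and soft label.

    batch: list of (embedding_a, embedding_b, soft_label) triples.
    """
    errors = [(cosine(a, b) - label) ** 2 for a, b, label in batch]
    return sum(errors) / len(errors)

# Toy batch: one perfect prediction, and one pair scored 0.0 but labeled 0.6.
batch = [
    ([1.0, 0.0], [2.0, 0.0], 1.0),
    ([1.0, 0.0], [0.0, 1.0], 0.6),
]
loss = soft_label_mse(batch)
```

During training, this loss would be backpropagated through the encoder so that pairs with high result overlap are pulled toward high cosine similarity.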

8/ Mining hard negatives is one of the trickiest and noisiest aspects of similarity/contrastive learning. Our trick lets us get around this by using soft labels based on web result overlap.

Bonus! Our predicted similarities end up better calibrated in the [0,1] range.
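Because every pair gets a graded label, negatives come for free: pairs with no shared results simply receive 0.0. The sketch below labels every pair in a hypothetical `{query: ranked_urls}` map. The thread does not say exactly how candidate pairs were selected, so the exhaustive pairing here is an assumption (it is quadratic and only suitable for small examples), and `build_training_pairs` is a made-up name.

```python
from itertools import combinations

def build_training_pairs(top_results, k=5):
    """Label every query pair by top-k result overlap, with no hard-negative mining.

    top_results: {query: [url, ...]} ranked result lists (hypothetical format).
    Returns (query_a, query_b, label) triples with label = shared URLs / k.
    """
    pairs = []
    for qa, qb in combinations(sorted(top_results), 2):
        shared = set(top_results[qa][:k]) & set(top_results[qb][:k])
        pairs.append((qa, qb, len(shared) / k))
    return pairs

# Made-up result lists: the two flight queries share 3 of 5 results.
top_results = {
    "cheap flights": ["u1", "u2", "u3", "u4", "u5"],
    "cheap airfare": ["u1", "u2", "u3", "u6", "u7"],
    "python docs": ["p1", "p2", "p3", "p4", "p5"],
}
pairs = build_training_pairs(top_results)
```

The two zero-label pairs play the role that mined negatives play in contrastive training, without a separate mining step.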

9/ Training this model on our soft-label data produces a state-of-the-art model, all by using domain-specific knowledge and lots of labeled data.

10/ We are releasing our model (huggingface.co/neeva/query2qu…) and the golden set used for evaluation (huggingface.co/datasets/neeva…) on @Hugging Face.

Take a look at our latest blog post for more information:

neeva.com/blog/state-of-…

Neeva

@Neeva
