Here we go!

Microsoft introduces a multimodal large language model called Kosmos-1.

Achieves great performance on language understanding, OCR-free NLP, perception-language tasks, visual QA, and more.

Take a look at some of the examples of generations from the Kosmos-1 model that can perceive general modalities. Would love to see how something like this might do with more data-specific visuals like charts, etc.

More examples demonstrating visual dialogue capabilities.

Okay, I have to admit this one caught my attention. Results on the Raven IQ test.

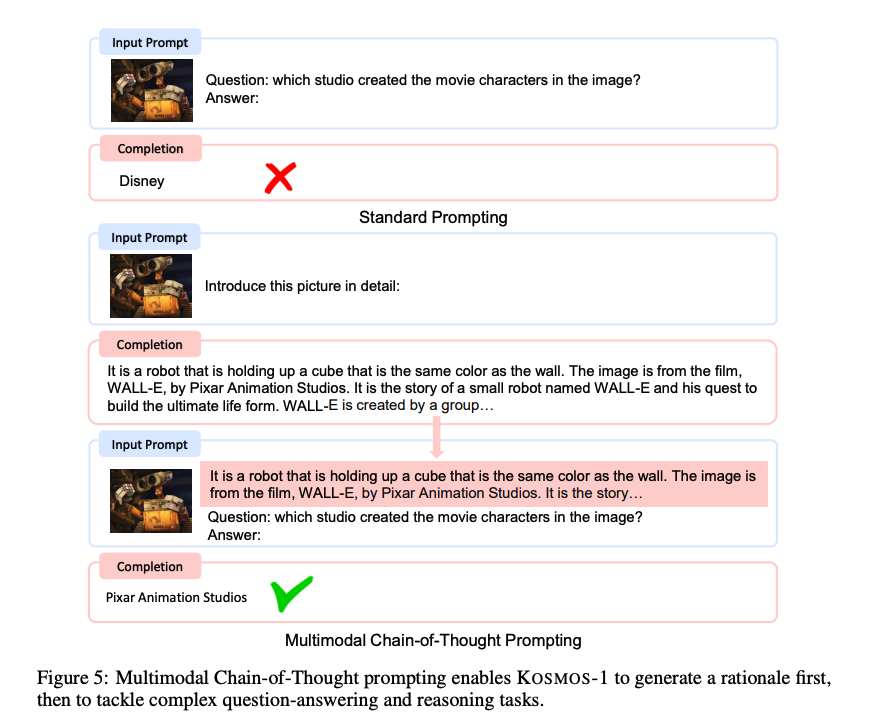

And of course, multimodal chain-of-thought prompting which allows for dealing with complex question-answering and reasoning tasks.

And before I forget, the paper: arxiv.org/abs/2302.14045

elvis

@omarsar0

Machine Learning & NLP Research • PhD • Building @dair_ai • Previously: Meta AI, Elastic

Missing some tweets in this thread? Or failed to load images or videos? You can try to .