Pete Florence@peteflorence

Mar 10, 2023

20 tweets

Today we share more on PaLM-E! (palm-e.github.io)

Thread  with blog post link at the end.

PaLM-E can do a lot of things across robotics, vision, and language… but let’s look at a few capabilities in detail, step by step

with blog post link at the end.

PaLM-E can do a lot of things across robotics, vision, and language… but let’s look at a few capabilities in detail, step by step

with blog post link at the end.

PaLM-E can do a lot of things across robotics, vision, and language… but let’s look at a few capabilities in detail, step by step

with blog post link at the end.

PaLM-E can do a lot of things across robotics, vision, and language… but let’s look at a few capabilities in detail, step by step

Danny Driess@DannyDriess

Mar 07 23

View on Twitter

What happens when we train the largest vision-language model and add in robot experiences?

The result is PaLM-E

, a 562-billion parameter, general-purpose, embodied visual-language generalist - across robotics, vision, and language.

Website: palm-e.github.io

, a 562-billion parameter, general-purpose, embodied visual-language generalist - across robotics, vision, and language.

Website: palm-e.github.io

, a 562-billion parameter, general-purpose, embodied visual-language generalist - across robotics, vision, and language.

Website: palm-e.github.io

, a 562-billion parameter, general-purpose, embodied visual-language generalist - across robotics, vision, and language.

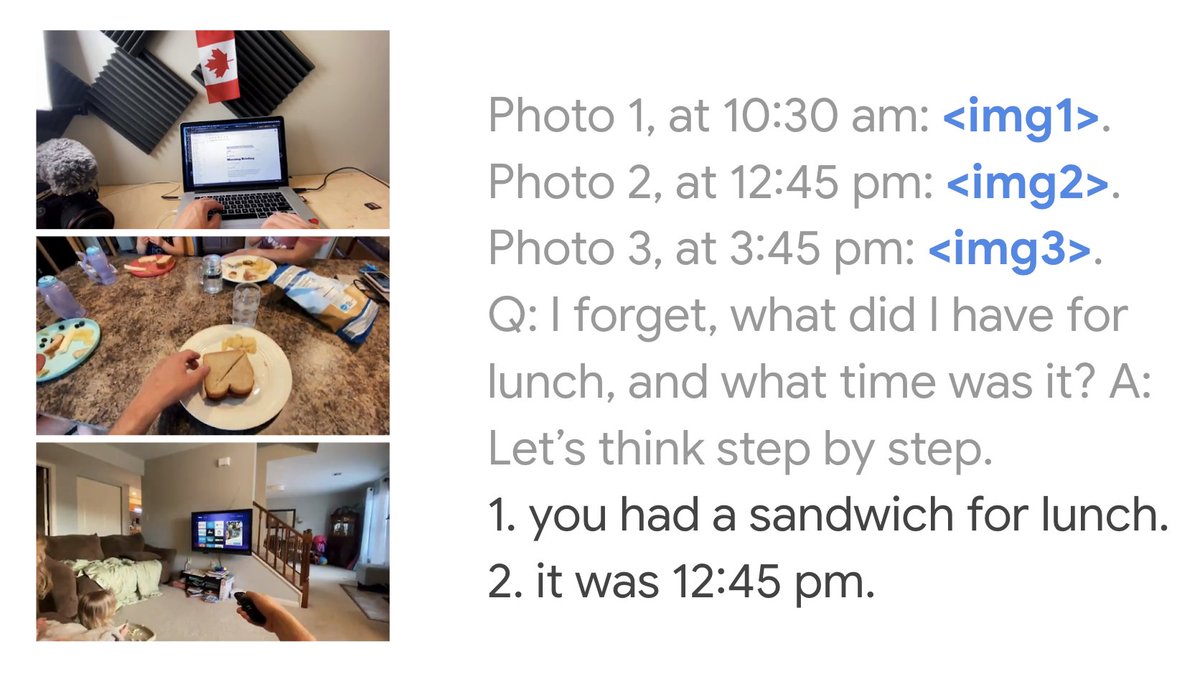

Website: palm-e.github.io For one, “Let’s think step by step” comes to multimodal models!

Zero-shot chain-of-thought has been one of these emergent behaviors that has caught considerable interest in researching LLM capabilities…

With PaLM-E-562B, zero-shot visual chain-of-thought comes “included”.

Multimodal chain-of-thought can be very helpful to get a sense of what the model is picking up on.

While the question here is only a 1-bit (yes/no) answer, the chain-of-thought provides much more than 1 bit of information on what the model sees.

Here’s a many-step zero-shot CoT example (prompt by @Ayzaan Wahid!). Note large VQA training datasets (VQAv2, OKVQA, etc.) typically only have 1-, 2-, 3-word answers, so these many-step answers are considerably out-of-distribution.

Here’s another multimodal reasoning question addressed with chain-of-thought, this time doing visual math questions, no OCR required despite needing spatial-textual context, just does everything all in one model. This prompt by @Fei Xia!

Moving on from chain-of-thought, another capability of PaLM-E that “just comes included” is the ability to do multi-image reasoning… despite only ever being trained on single-image examples.

For this multi-image reasoning, since PaLM-E flexibly supports multimodal sentences, it can answer questions about specific relationships between images. While the previous example was a “what matches?” question, this one is a “what’s different?” question.

Extending multi-image further, we can do more than just 2 images...

For this, let’s look at a capability we showed last year with Socratic Models (socraticmodels.github.io, led by @Andy Zeng), where we could do long-form egocentric video understanding, some examples here:

In Socratic Models, this worked by writing out a language-based world-state history – a timestamped log of textually-represented events:

With PaLM-E, we can do this end-to-end, all in one model, with no explicit textual intermediate stage.

A wide set of temporal/visual reasoning capabilities are in scope.

Lots of potential AR & Robotics applications here.

Quantitatively, PaLM-E-562B sets a new state-of-the-art, 66.1, on OK-VQA dataset.

This number is also achieved with a *generalist* (one model), also trained on diverse robotics and vision data, and without a final task-specific finetuning stage on OK-VQA.

In a recent-ish podcast (recorded in October, released in January), I had a few comments on where large-scale multimodal models are headed and “one big model” approach... (see around 42 minutes here)

twitter.com/gradientpub/st

The Gradient (sigmoid.social/@thegradient)@gradientpub

Jan 06 23

View on Twitter

Check out our interview with Google's @Pete Florence!

We chat about how robotics can benefit from dense visual representations, neural radiance fields, and large language models.

It's an exciting time for robotics, take a listen!  thegradientpub.substack.com/p/pete-florenc

thegradientpub.substack.com/p/pete-florenc

thegradientpub.substack.com/p/pete-florenc

thegradientpub.substack.com/p/pete-florencInteresting to look back at that interview now – finishing out the results of PaLM-E has definitely shifted my perspective!

(btw, thanks @The Gradient (sigmoid.social/@thegradient) + @sigmoid.social/@andrey for having me on!)

Another capability of PaLM-E-562B is that it's, quantitatively, an excellent language model. Roughly as good as PaLM-540B.

Notable that scaling the model significantly reduces catastrophic language forgetting  twitter.com/DannyDriess/st

twitter.com/DannyDriess/st

twitter.com/DannyDriess/st

twitter.com/DannyDriess/st

Danny Driess@DannyDriess

Mar 07 23

View on Twitter

We observe a notable trend with model scale: the larger the language model, the more it maintains its language capabilities when training on visual-language and robotics tasks – quantitatively, the 562B PaLM-E model nearly retains all of its language capabilities.

But of course, avoiding forgetting is a low bar :)

I’ve been glad to see that folks are picking up on the **transfer** story of PaLM-E – for example see r/MachineLearning:

reddit.com/r/MachineLearn

For robotics, PaLM-E is a rapid learner of new planning tasks, requiring only a handful of samples to start generalizing well in a given domain. Here we plot PaLM-E sample complexity relative to baseline – the difference is solely transfer learning. (Subset of Table 2)

PaLM-E can do few-shot and zero-shot generalization – it never had training data for “push the red blocks to the coffee cup”, and only had ever seen this coffee cup in 3 images. See website for the never-before-seen “turtle” object too.

Towards wrapping up here, in addition to all our co-authors, I want to especially give a shout-out and thanks to all the Google teams who helped make the effort possible! Especially the folks behind training PaLM and the large ViTs from which PaLM-E is built.

Here is the link for the blog post:

twitter.com/GoogleAI/statu

Google AI@GoogleAI

Mar 10 23

View on Twitter

Today we share PaLM-E, a generalist, embodied language model for robotics. The largest instantiation, 562 billion parameters, is also a state-of-the-art visual-language model, has PaLM’s language skills, and can be successfully applied across robot types →goo.gle/3JsszmK

And I want to close with a Haiku.

Prompt in gray by @Brian Ichter, and the completion written by PaLM-E-562B:

Pete Florence

@peteflorence

Research Scientist @GoogleAI // research on robotics + AI // PhD @MIT_CSAIL

Missing some tweets in this thread? Or failed to load images or videos? You can try to .