Zeyuan Allen-Zhu, Sc.D.@ZeyuanAllenZhu

May 3

8 tweets

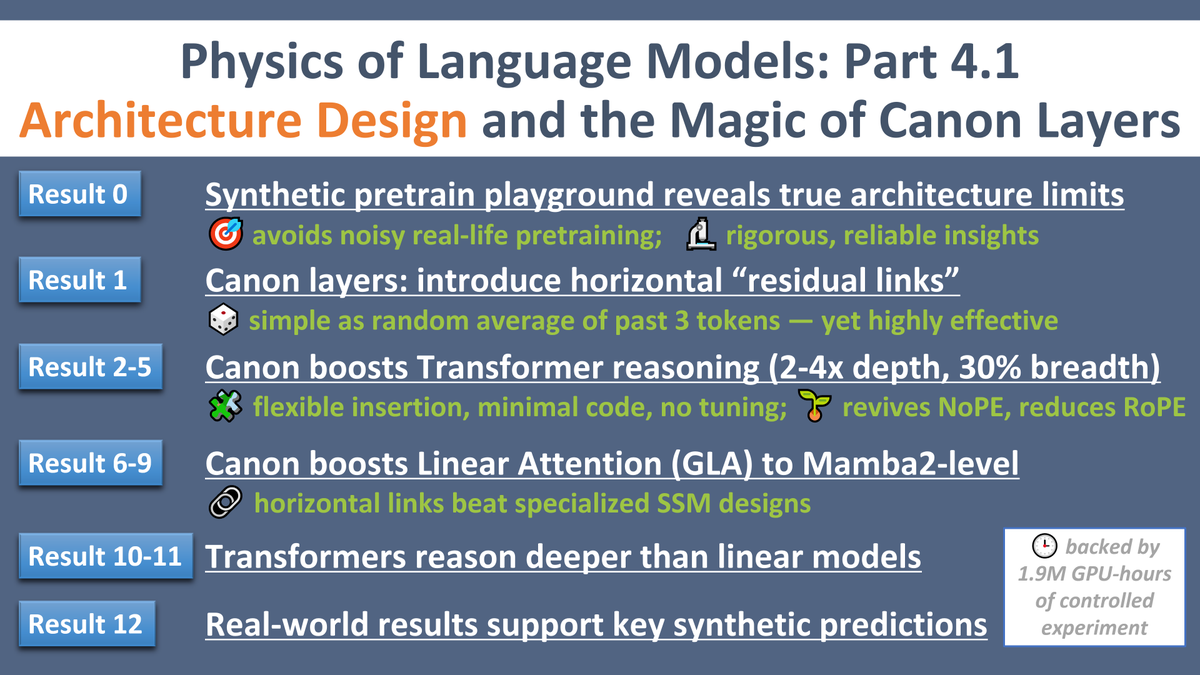

(1/8) A Galileo moment for LLM design

A Galileo moment for LLM design As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite

As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite

A Galileo moment for LLM design

A Galileo moment for LLM design As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite

As Pisa Tower experiment sparked modern physics, our controlled synthetic pretraining playground reveals LLM architectures' true limits. A turning point that might divide LLM research into "before" and "after." physics.allen-zhu.com/part-4-archite (2/8) Real-life pretraining at repeatable academic scale (100B tokens): architectural differences vanish in noise. Our synthetic playground changes the game:

Clear trends (e.g., 2x reasoning depth)

Clear trends (e.g., 2x reasoning depth)

Early emergence of advanced skills

Early emergence of advanced skills

High-quality data predicts future designs

High-quality data predicts future designs

Clear trends (e.g., 2x reasoning depth)

Clear trends (e.g., 2x reasoning depth)

Early emergence of advanced skills

Early emergence of advanced skills

High-quality data predicts future designs

High-quality data predicts future designs (3/8) We design 5 synthetic pretrain tasks to isolate atomic skills, ensuring:

True mental reasoning ("system-1"), not just CoT

True mental reasoning ("system-1"), not just CoT

Short (4k) contexts—where real models actually think

Short (4k) contexts—where real models actually think

No toy tasks—real insights into architectural limits

No toy tasks—real insights into architectural limits

True mental reasoning ("system-1"), not just CoT

True mental reasoning ("system-1"), not just CoT

Short (4k) contexts—where real models actually think

Short (4k) contexts—where real models actually think

No toy tasks—real insights into architectural limits

No toy tasks—real insights into architectural limits (4/8) We introduce Canon layers (named after musical Canon )—lightweight horizontal residuals. Simple (average 3 past tokens), plug into any model, yet transformative:

)—lightweight horizontal residuals. Simple (average 3 past tokens), plug into any model, yet transformative:

Boosts reasoning (2-4x depth, 30% breadth)

Boosts reasoning (2-4x depth, 30% breadth)

Minimal overhead & flexible integration

Minimal overhead & flexible integration

Tiny, no tuning

Tiny, no tuning

)—lightweight horizontal residuals. Simple (average 3 past tokens), plug into any model, yet transformative:

)—lightweight horizontal residuals. Simple (average 3 past tokens), plug into any model, yet transformative:

Boosts reasoning (2-4x depth, 30% breadth)

Boosts reasoning (2-4x depth, 30% breadth)

Minimal overhead & flexible integration

Minimal overhead & flexible integration

Tiny, no tuning

Tiny, no tuning (5/8) Canon layers revive NoPE!

No positional embeddings? Canon makes it match or even beat RoPE

No positional embeddings? Canon makes it match or even beat RoPE

Best of both worlds: top reasoning + great length generalization

Best of both worlds: top reasoning + great length generalization

Works across attention, MLP & boosts even MoE capacity

Works across attention, MLP & boosts even MoE capacity

Safe, stable, plug-and-play

Safe, stable, plug-and-play

No positional embeddings? Canon makes it match or even beat RoPE

No positional embeddings? Canon makes it match or even beat RoPE

Best of both worlds: top reasoning + great length generalization

Best of both worlds: top reasoning + great length generalization

Works across attention, MLP & boosts even MoE capacity

Works across attention, MLP & boosts even MoE capacity

Safe, stable, plug-and-play

Safe, stable, plug-and-play (6/8) Linear attention is fast—but traditionally weak. Canon layers fix that:

Now matching or beating Mamba2

Now matching or beating Mamba2

4× reasoning depth, 100%+ breadth, 50%+ manipulation length

4× reasoning depth, 100%+ breadth, 50%+ manipulation length

Safe, easy & efficient: residual-only, no activation, minimal overhead

Safe, easy & efficient: residual-only, no activation, minimal overhead

Now matching or beating Mamba2

Now matching or beating Mamba2

4× reasoning depth, 100%+ breadth, 50%+ manipulation length

4× reasoning depth, 100%+ breadth, 50%+ manipulation length

Safe, easy & efficient: residual-only, no activation, minimal overhead

Safe, easy & efficient: residual-only, no activation, minimal overhead (7/8) Did you know? Mamba's strength largely comes from a hidden "conv1d" layer—like Canon, but weaker—not its SSM.

Remove it? Mamba drops to linear attention.

Remove it? Mamba drops to linear attention.

Replace w/ full Canon? Beats original Mamba.

Replace w/ full Canon? Beats original Mamba.

Canon works even outside SSMs, revealing what really matters.

Canon works even outside SSMs, revealing what really matters.

Remove it? Mamba drops to linear attention.

Remove it? Mamba drops to linear attention.

Replace w/ full Canon? Beats original Mamba.

Replace w/ full Canon? Beats original Mamba.

Canon works even outside SSMs, revealing what really matters.

Canon works even outside SSMs, revealing what really matters. (8/8)  With Canon layers, Transformers still outperform linear models in deep reasoning (4× depth)

With Canon layers, Transformers still outperform linear models in deep reasoning (4× depth)

Linear models are limited by compression & retrieval—not memory

Linear models are limited by compression & retrieval—not memory

Real-life pretraining adds noise, hides differences

Real-life pretraining adds noise, hides differences

Synthetic playground pinpoints what actually matters

Synthetic playground pinpoints what actually matters

With Canon layers, Transformers still outperform linear models in deep reasoning (4× depth)

With Canon layers, Transformers still outperform linear models in deep reasoning (4× depth)

Linear models are limited by compression & retrieval—not memory

Linear models are limited by compression & retrieval—not memory

Real-life pretraining adds noise, hides differences

Real-life pretraining adds noise, hides differences

Synthetic playground pinpoints what actually matters

Synthetic playground pinpoints what actually matters

Zeyuan Allen-Zhu, Sc.D.

@ZeyuanAllenZhu

physics of language models @ Meta (FAIR, not GenAI)

🎓:Tsinghua Physics — MIT — Princeton/IAS

🏅:IOI x 2 — ACM-ICPC — USACO — Codejam — math MCM

Missing some tweets in this thread? Or failed to load images or videos? You can try to .